A children's health professor at the University of Liverpool received a bizarre email in August 2025: "Are you aware you're on TikTok?"

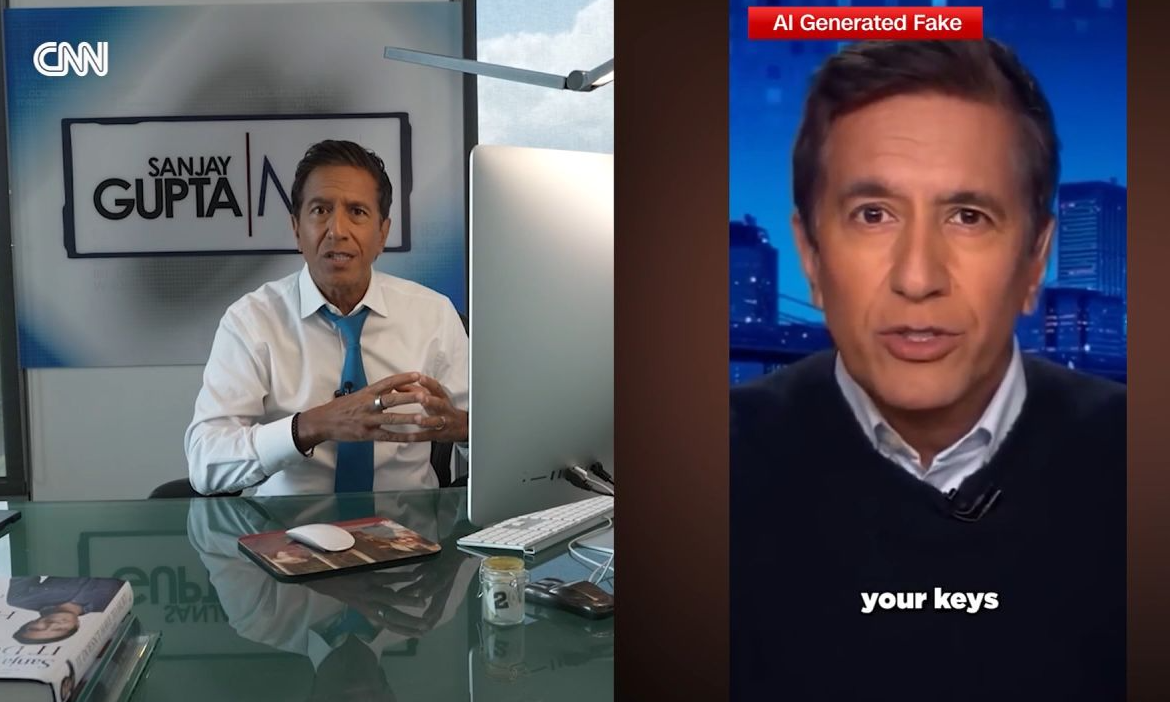

The professor had never posted there. Yet dozens of videos showed him discussing "thermometer leg," a fabricated menopause symptom, and directing viewers to buy supplements. The footage came from a real medical talk, with his face and voice subtly manipulated by AI to sell products he'd never heard of.

Full Fact's investigation uncovered hundreds of similar videos across TikTok, Instagram, Facebook, and YouTube, all featuring real doctors and academics unwittingly hawking Wellness Nest supplements. Some physicians appeared in 14 separate deepfakes. CBS News found over 100 fraudulent doctor videos across platforms, many viewed millions of times.

These scams don't sell fake products. They sell fake authority — borrowing credibility from real professionals to move inventory.

Trust is expensive to build and cheap to steal. There's a category to build here: impersonation monitoring and takedown operations for professionals whose reputation equals income.

Platform economics favor fraud at scale

Health scams already favor criminals because buyers are emotional and claims are hard to police at scale. Deepfakes have industrialized the model by making high-quality impersonation accessible to anyone.

ESET researchers identified over 20 TikTok and Instagram accounts using AI-generated doctors to push supplements. Media Matters documented eight TikTok accounts running deepfake doctor schemes, while Full Fact found that impersonated academics included Prof. David Taylor-Robinson (14 videos), Duncan Selbie (8 videos), and even the late Dr. Michael Mosley. Dr. Joel Bervell, a medical influencer with a substantial following, discovered deepfakes of himself promoting products "96% more effective than Ozempic" across TikTok, Instagram, Facebook, and YouTube.

Cosmetic chemist Javon Ford demonstrated that the Captions app's AI avatars, originally designed for creators, let anyone generate convincing medical deepfakes in minutes. One deepfake account posed as a gynecologist with "13 years of experience"; its presenter was traced directly to an app's avatar library.

Platforms aren't structurally motivated to fix it fast. Reuters obtained internal Meta documents showing the company projected that about 10% of its 2024 revenue (roughly $16 billion) would come from ads tied to scams and banned goods. According to the documents, Meta showed users an estimated 15 billion "higher-risk" scam ads every day. The company bans advertisers only when its automated systems are at least 95% certain of fraud. Below that threshold, suspected scammers pay higher ad rates but keep running campaigns.

Internal enforcement teams faced revenue guardrails limiting them to actions costing no more than 0.15% of total revenue — about $135 million in early 2025. A manager overseeing anti-scam efforts wrote: "Let's be cautious. We have specific revenue guardrails."
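The two Reuters figures can be cross-checked with simple arithmetic. Dividing each dollar amount by the share it represents gives the revenue base it implies:

$$
\frac{\$16\ \text{billion}}{0.10} \approx \$160\ \text{billion (2024 revenue)},
\qquad
\frac{\$135\ \text{million}}{0.0015} = \$90\ \text{billion (guardrail base)}.
$$

The guardrail base is a little over half the implied annual figure. That fits a cap measured over part of 2025 rather than a full year.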

Meta's own May 2025 internal presentation estimated that its platforms were involved in roughly one-third of all successful scams in the United States. A British regulator found that Meta's products were involved in 54% of payment-related scam losses in the UK in 2023, more than all other social platforms combined.

U.S. senators have pushed for probes into scam and deepfake ads running on Meta's platforms. TikTok removed the thermometer leg video six weeks after Prof. Taylor-Robinson complained, having initially judged some of the reported content acceptable. When CBS News contacted the platforms about flagged videos, TikTok and Meta removed the policy-violating content. YouTube, however, said the videos it was sent didn't violate its Community Guidelines and would stay up. Without structural changes to platform economics, the volume of fraudulent content will continue to grow.

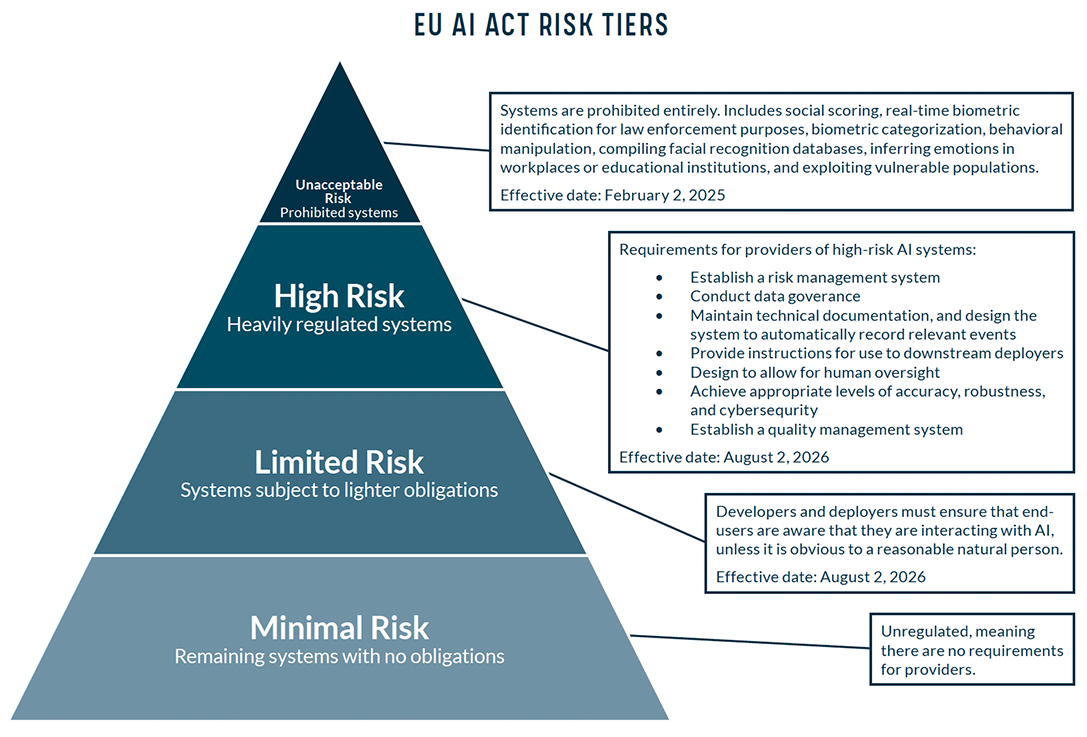

New regulations require documented identity verification

The EU AI Act's transparency obligations, including those covering synthetic and deepfake content, are pushing the ecosystem toward disclosure and traceability norms. California's AB-3211, under consideration, would require device makers to attach provenance metadata to photos and would require online platforms to disclose that metadata. If passed, it would take effect in 2026.

In February 2024, the U.S. Federal Trade Commission proposed new protections to combat AI impersonation of individuals. Multiple states have enacted legislation criminalizing nonconsensual deepfakes intended to spread misinformation.

Organizations that handle professional credentials will increasingly need documented processes for verifying identity and flagging impersonation. They'll pay for tools that provide both.

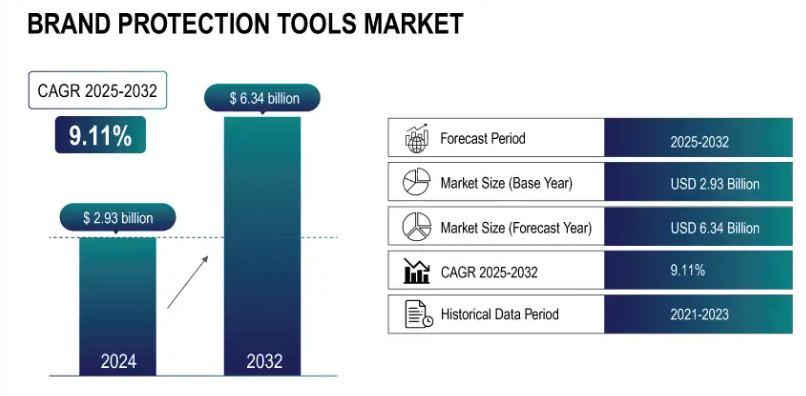

Brand protection is a $3B market — but no one serves individuals

The brand protection tools market is growing steadily, driven by digital impersonation and fraud. It was valued at roughly $3 billion in 2024 and is projected to reach $6.34 billion by 2032, a CAGR of 9.11%.
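The growth figures in this section all rest on the standard compound-annual-growth formula. The sketch below applies that formula to the rounded numbers quoted here (in billions of USD, treating 2024 to 2032 as eight compounding years). It is a quick consistency check, not the market reports' own methodology. `cagr` and `project` are illustrative helper names.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by a start and an end value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Rounded figures from the text, in billions of USD; 2024 -> 2032 is 8 years.
global_implied = cagr(3.0, 6.34, 8)          # roughly 0.098
global_projected = project(3.0, 0.0911, 8)   # roughly 6.03
us_implied = cagr(0.85, 1.68, 8)             # roughly 0.089

print(f"implied global CAGR: {global_implied:.2%}")
print(f"$3B compounded at 9.11% for 8 years: ${global_projected:.2f}B")
print(f"implied U.S. CAGR: {us_implied:.2%}")
```

On the rounded figures, the implied global rate comes out near 9.8%, a bit above the cited 9.11%. Likewise, compounding $3 billion at 9.11% lands near $6.03 billion rather than $6.34 billion. The gap is small and consistent with the 2024 base being rounded to "roughly $3 billion," so the projections are best read as approximate.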

Social media monitoring and brand reputation management tools (the closest analog to what we're proposing) are projected to grow fastest of any segment, at a CAGR of 11.92% from 2025 to 2032. Brands are doing more on social media, and the risk of impersonation, fake reviews, and viral disinformation is rising with it.

The U.S. brand protection market alone was valued at $0.85 billion in 2024 and is expected to reach $1.68 billion by 2032. According to the FTC, Americans lost more than $12.5 billion to fraud in 2024, up 25% from 2023. Social media platforms played an outsized role: losses from scams that started on social media reached $1.9 billion in 2024.

Enterprise brand protection vendors such as Red Points, MarqVision, and ZeroFox already sell impersonation takedowns and broad monitoring. Red Points, for example, analyzes more than 2.7 billion data points a month to find and remove counterfeit listings, and supports more than 1,300 brands worldwide.

A solo endocrinologist, therapist, or CFP doesn't want an enterprise suite priced for Fortune 500 companies. They need a focused product: monitoring for impersonation, evidence generation for takedowns, and someone to handle the administrative chase across platforms. The pricing delta between enterprise solutions ($50k-$200k annually) and what an individual professional will pay ($600-$12k annually) creates room for a new product category.
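Of the three jobs listed above, evidence generation is the most mechanical. The sketch below assumes the service already saves a copy of each offending ad or landing page (HTML, screenshot, or video bytes). It fingerprints that copy with SHA-256 and records when and where it was captured, so a reviewer can later confirm the file was not altered. `EvidenceRecord`, `capture_evidence`, and all field names are hypothetical and do not reflect any platform's takedown-form schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class EvidenceRecord:
    """Hypothetical evidence bundle for one impersonating ad or landing page."""

    professional: str  # person being impersonated
    platform: str      # where the content runs, e.g. "TikTok"
    url: str           # ad or landing-page URL as captured
    captured_at: str   # ISO-8601 timestamp of the capture (UTC by default)
    sha256: str        # fingerprint of the captured bytes
    notes: str = ""    # free-text description for the platform reviewer


def capture_evidence(
    professional: str,
    platform: str,
    url: str,
    content: bytes,
    notes: str = "",
    now: Optional[datetime] = None,
) -> EvidenceRecord:
    """Hash the captured bytes and stamp the capture time."""
    captured_at = (now or datetime.now(timezone.utc)).isoformat()
    digest = hashlib.sha256(content).hexdigest()
    return EvidenceRecord(professional, platform, url, captured_at, digest, notes)


def verify(record: EvidenceRecord, content: bytes) -> bool:
    """Check that stored content is byte-for-byte what was originally captured."""
    return hashlib.sha256(content).hexdigest() == record.sha256


def to_report(record: EvidenceRecord) -> str:
    """Serialize a record as JSON for a case file or takedown submission."""
    return json.dumps(asdict(record), indent=2, sort_keys=True)


if __name__ == "__main__":
    # Hypothetical example values; example.com stands in for a real ad URL.
    record = capture_evidence(
        professional="Dr. Example",
        platform="TikTok",
        url="https://example.com/ad/123",
        content=b"<saved landing page HTML>",
        notes="AI-altered conference talk promoting supplements",
    )
    print(to_report(record))
```

Storing only the hash in the record keeps case files small. The raw capture can then live in cheaper storage, and `verify` proves it still matches.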

The product: Trust Security OS

Build a LifeLock-style service for high-trust professionals: doctors, therapists, attorneys, financial advisors, academics, and credible creators. The product is workflow automation for reputation defense — not a deepfake detection science project.

The sharpest wedge is paid ads and landing pages. Start where impersonation has the highest intent and harm, where ROI is easiest to justify, and where you can build repeatable takedown playbooks. Generalized deepfake discovery across all organic content is harder to scope and harder to sell.
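Because the product is workflow automation rather than detection research, its core is a case tracker that encodes the takedown playbook. The sketch below assumes one case per impersonating ad or landing page. The state names, allowed transitions, and `TakedownCase` class are all hypothetical. `REJECTED` and `ESCALATED` exist because platforms do not always act on the first report, as YouTube's response to CBS News showed. `days_open` surfaces delays like the six weeks TikTok took to remove the thermometer leg video.

```python
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class CaseState(Enum):
    DETECTED = "detected"
    EVIDENCE_CAPTURED = "evidence_captured"
    REPORTED = "reported"
    REMOVED = "removed"
    REJECTED = "rejected"    # platform replied that the content does not violate policy
    ESCALATED = "escalated"  # appeal, regulator complaint, or legal letter
    CLOSED = "closed"


# Hypothetical playbook: the states a case may move to from each state.
TRANSITIONS = {
    CaseState.DETECTED: {CaseState.EVIDENCE_CAPTURED},
    CaseState.EVIDENCE_CAPTURED: {CaseState.REPORTED},
    CaseState.REPORTED: {CaseState.REMOVED, CaseState.REJECTED},
    CaseState.REJECTED: {CaseState.ESCALATED, CaseState.CLOSED},
    CaseState.ESCALATED: {CaseState.REMOVED, CaseState.CLOSED},
    CaseState.REMOVED: {CaseState.CLOSED},
    CaseState.CLOSED: set(),
}


class TakedownCase:
    """One impersonating ad or landing page, tracked from detection to closure."""

    def __init__(self, url: str, platform: str, opened_at: Optional[datetime] = None) -> None:
        self.url = url
        self.platform = platform
        start = opened_at or datetime.now(timezone.utc)
        # Each entry is (state, timezone-aware time the case entered that state).
        self.history = [(CaseState.DETECTED, start)]

    @property
    def state(self) -> CaseState:
        return self.history[-1][0]

    def advance(self, new_state: CaseState, at: Optional[datetime] = None) -> None:
        """Move to new_state, rejecting steps the playbook does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot move from {self.state.value} to {new_state.value}")
        self.history.append((new_state, at or datetime.now(timezone.utc)))

    def days_open(self, until: Optional[datetime] = None) -> float:
        """Elapsed days since detection, measured to `until` or to now."""
        end = until or datetime.now(timezone.utc)
        return (end - self.history[0][1]).total_seconds() / 86400
```

Keeping the allowed transitions in one table means a platform-specific playbook, such as an extra appeal step, becomes a data change rather than new control flow.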

Core module 1: Continuous detection

Start where the data is easiest and most valuable:
