A newspaper is supposed to be the last place on earth where rough drafts survive contact with the public.

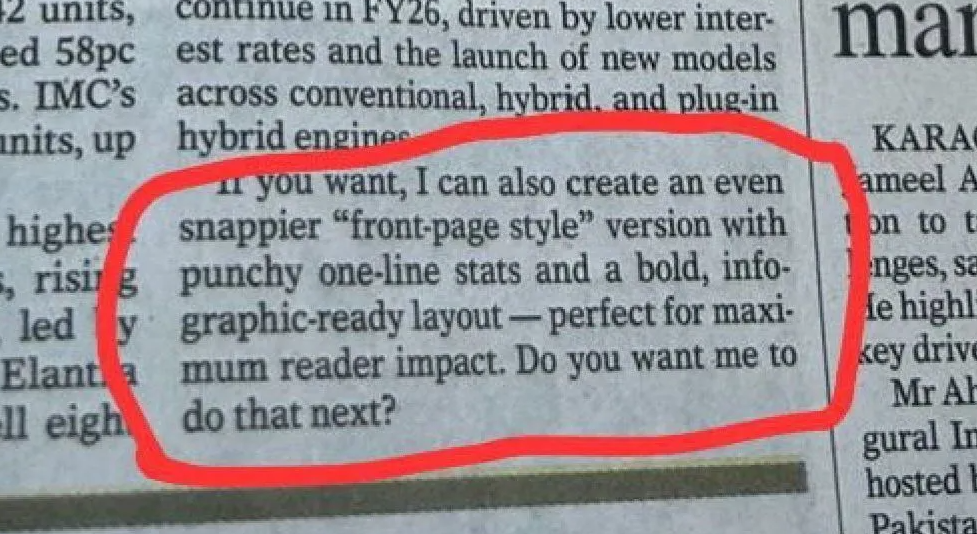

On November 12, 2025, Pakistan's Dawn—the country's most respected English-language daily—ran a business story about automobile sales. The print edition ended with a sentence that doesn't belong in journalism: "If you want, I can also create an even snappier 'front-page style' version with punchy one-line stats and a bold, infographic-ready layout perfect for maximum reader impact. Do you want me to do that next?"

A workflow leak, plain and simple. Someone used AI to punch up the copy. Someone else assumed it had been checked. The content moved from Doc to CMS to layout to print, bypassing the last-mile "are we about to embarrass ourselves?" gate that apparently didn't exist.

Dawn issued an apology calling the prompt "junk," admitted it violated their own AI policy, and launched an internal investigation. Screenshots circulated on Reddit, X, and LinkedIn. The comments ranged from mockery to genuine concern about journalism's credibility in the AI era.

AI doesn't wreck your brand. A screenshot does. A single visible artifact triggers the question: "If they missed that, what else are they missing?" That's why the market for pre-publish safety software could hit $10K/month per enterprise customer—because one embarrassing incident costs more than a year of prevention.

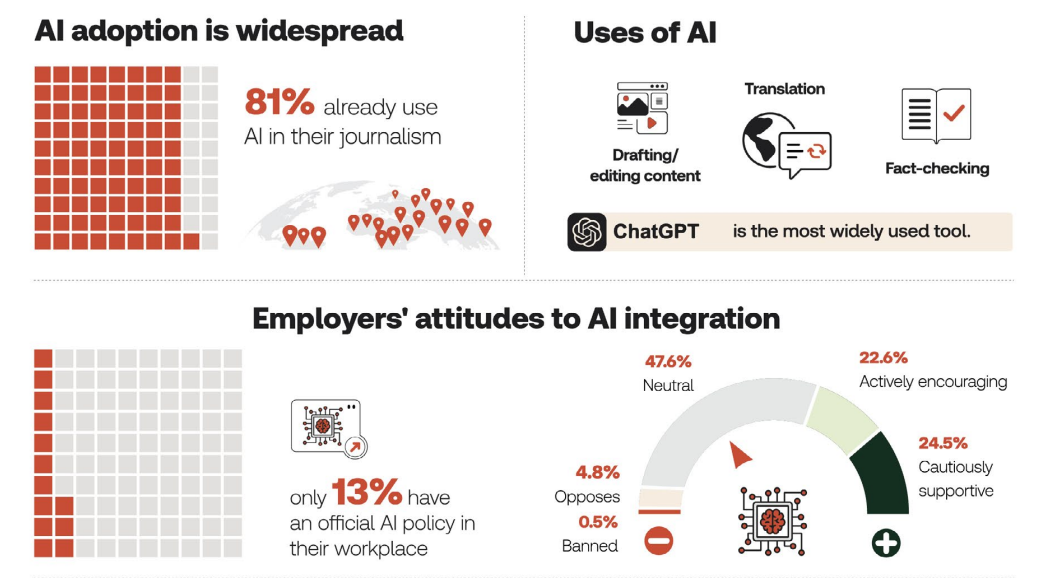

Mainstream adoption meets zero controls

Dawn's mistake was publishing without controls. In a Thomson Reuters Foundation survey of 200+ journalists across 70 countries, 81.7% reported using AI tools in their work, with 49.4% using them daily. That's mainstream behavior, yet only 13% of newsrooms have a formal AI policy, and 79.1% of respondents reported no clear company policy at all.

High adoption meets zero controls in workflows that assume human review at every step. When a junior editor is slammed, when a CMS bug auto-publishes, when someone clicks through without reading—that's when AI artifacts slip into print.

Once you're a screenshot, you're a case study.

The catalog of AI publishing failures

Dawn's prompt leak is one failure mode in a growing catalog:

Prompt remnants: Internal AI instructions that make it to publish ("If you want, I can also...")

Fabricated citations: In May 2025, both the Chicago Sun-Times and The Philadelphia Inquirer published summer book lists packed with AI-invented titles that don't exist. The lists came from a syndicated partner and slipped through without fact-checking—forcing both papers to issue corrections and update their editorial policies.

Invented quotes: Quotes that got "improved" and smoothed out by AI until they no longer match what anyone actually said.

Orphan statistics: Numbers, percentages, and claims with no linked source—plausible-sounding but with no provenance.

Tone drift: AI smoothing out quotes into sanitized corporate-speak, losing the speaker's voice.

Policy violations: Content that bypasses disclosure requirements because the workflow doesn't enforce them.

These are production incidents happening right now, across newsrooms of every size.

Build the pre-publish firewall

The product: a "bullshit firewall" SaaS that scans drafts before publish and flags AI artifacts, unsourced claims, policy violations, and reputational risks—then enforces your AI policy automatically.
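To make the idea concrete, here is a minimal sketch of what the scanning step might look like, covering just two of the failure modes from the catalog: prompt remnants and orphan statistics. Everything in it is an assumption for illustration: the pattern list, the `SOURCE_CUES` heuristic, and the `scan_draft` and `Flag` names are hypothetical, and a real product would need far richer rules than a handful of regexes.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical patterns for chatbot residue; a real rule set would be tuned per newsroom.
PROMPT_REMNANT_PATTERNS = [
    r"\bif you want, i can\b",
    r"\bdo you want me to\b",
    r"\bas an ai (?:language )?model\b",
]

# A percentage figure such as "81.7%".
PERCENT = re.compile(r"\d+(?:\.\d+)?%")

# Crude attribution cues. Assumption: a paragraph containing none of these
# gives the reader no visible source for its numbers.
SOURCE_CUES = re.compile(
    r"\b(according to|survey|study|report|reported|said|source)\b|https?://",
    re.IGNORECASE,
)


@dataclass
class Flag:
    paragraph: int  # 1-based index of the flagged paragraph
    rule: str       # which check fired
    excerpt: str    # the text that triggered it


def scan_draft(text: str) -> List[Flag]:
    """Return a list of flags for a draft, one paragraph at a time."""
    flags: List[Flag] = []
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    for i, para in enumerate(paragraphs, start=1):
        # Prompt remnants: report the first matching pattern per paragraph.
        for pattern in PROMPT_REMNANT_PATTERNS:
            match = re.search(pattern, para, re.IGNORECASE)
            if match:
                flags.append(Flag(i, "prompt_remnant", match.group(0)))
                break
        # Orphan statistics: a percentage with no attribution cue nearby.
        stat = PERCENT.search(para)
        if stat and not SOURCE_CUES.search(para):
            flags.append(Flag(i, "orphan_statistic", stat.group(0)))
    return flags


if __name__ == "__main__":
    draft = (
        "Car sales rose sharply in October.\n\n"
        "If you want, I can also create an even snappier version. "
        "Do you want me to do that next?\n\n"
        "Nearly 40% of buyers chose hybrids."
    )
    for flag in scan_draft(draft):
        print(flag)
```

Run against a draft like the Dawn story, a check of this kind would flag the closing "If you want, I can also..." paragraph before it reached layout. The design choice worth noting is that each flag carries a paragraph index and an excerpt, so an editor sees exactly what to fix instead of a pass/fail verdict.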

This is operational quality control—the boring, unsexy layer that makes AI-assisted publishing safe enough to scale. The key is where it runs:
