Every compliance team is having the same nightmare right now.

Employee #1: "We need to use LLMs to summarize patient notes, analyze support tickets, and draft legal briefs."

Employee #2 (Legal): "Great. Strip every name, date, and identifier before it leaves the building."

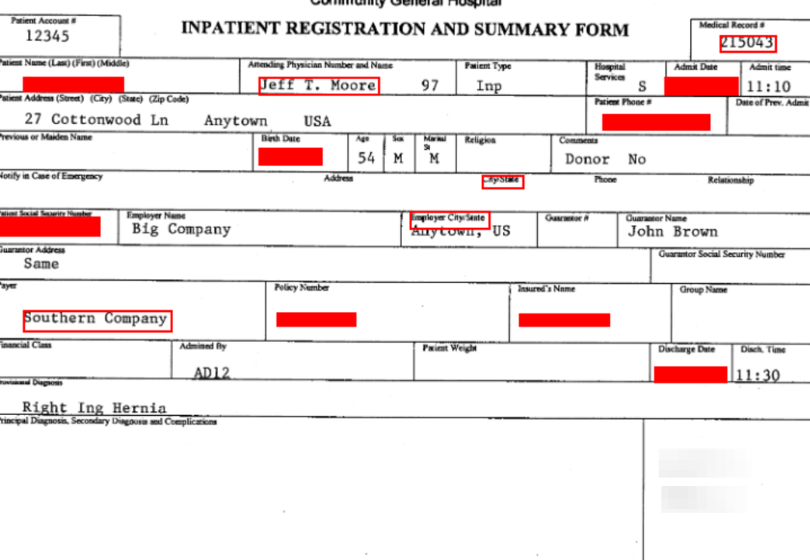

So the team does the "compliant" thing and redacts:

"John Smith contacted Dr. Patel on March 3rd about his mother Jane's medication exception approved by Dr. Chen..."

becomes:

"[REDACTED] contacted [REDACTED] on [REDACTED] about [REDACTED] medication exception approved by [REDACTED]..."

Problem solved, right?

Wrong. You just turned a coherent medical record into oatmeal. The LLM can't track who did what, who reports to whom, or what happened when. Your "safe" prompt is now useless noise.

Teams face two bad options: send raw identifiers and pray, or destroy so much context that the model returns garbage.

The Opportunity

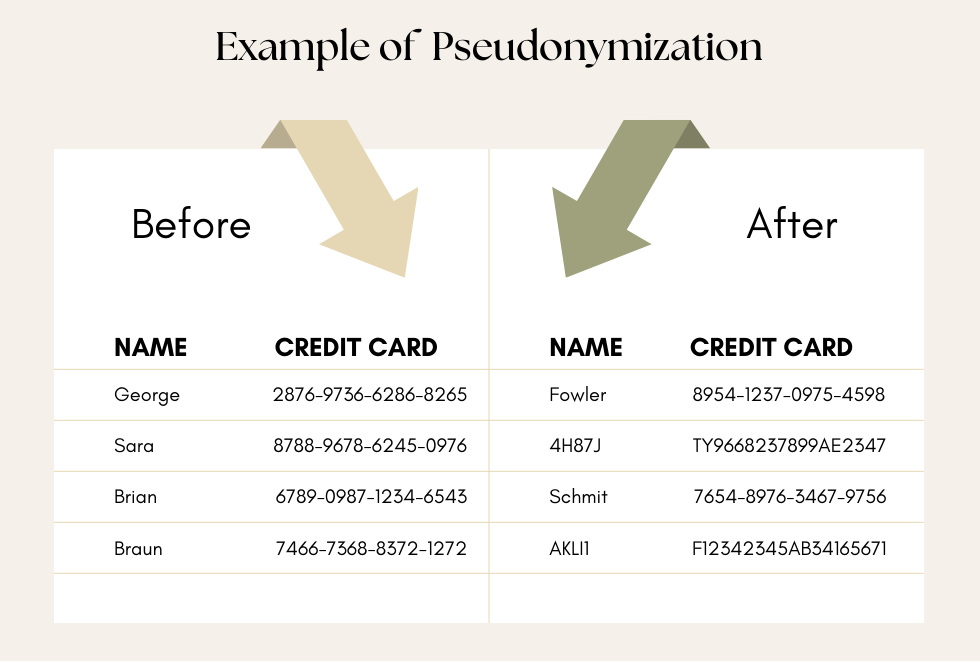

Build an LLM-native governance control plane that pseudonymizes PII while preserving relationships. Start with one vertical—healthcare ops is strongest—and ship policy-controlled pseudonyms instead of context-destroying redaction. Mid-market teams pay $2,500-$8,000/month. Enterprise contracts run $60k-$250k/year. You become required infrastructure written into compliance policy.
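Here is the difference between redaction and pseudonyms on the same note. This is a minimal sketch, not a product design. It assumes a detector such as Presidio has already returned character spans. The `Span` type, the hand-picked spans, the `PERSON_1` token format and the choice to leave "Dr." outside the span are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Span:
    # Hypothetical detector output: character offsets plus an entity label.
    start: int
    end: int
    entity_type: str


def span_of(text: str, needle: str, entity_type: str) -> Span:
    # Demo helper: locate the first occurrence of `needle` in `text`.
    start = text.index(needle)
    return Span(start, start + len(needle), entity_type)


def redact(text: str, spans: list[Span]) -> str:
    # Every identifier becomes the same opaque marker, so "who did what" is lost.
    out, cursor = [], 0
    for s in sorted(spans, key=lambda s: s.start):
        out.append(text[cursor:s.start] + "[REDACTED]")
        cursor = s.end
    return "".join(out) + text[cursor:]


def pseudonymize(text: str, spans: list[Span]) -> tuple[str, dict[str, str]]:
    # Each distinct (type, value) pair gets a stable typed token like PERSON_1.
    forward: dict[tuple[str, str], str] = {}
    counters: dict[str, int] = {}
    out, cursor = [], 0
    for s in sorted(spans, key=lambda s: s.start):
        key = (s.entity_type, text[s.start:s.end])
        if key not in forward:
            counters[s.entity_type] = counters.get(s.entity_type, 0) + 1
            forward[key] = f"{s.entity_type}_{counters[s.entity_type]}"
        out.append(text[cursor:s.start] + forward[key])
        cursor = s.end
    # The reverse map (token -> original value) never leaves the trust boundary.
    reverse = {token: value for (_, value), token in forward.items()}
    return "".join(out) + text[cursor:], reverse


def restore(text: str, reverse: dict[str, str]) -> str:
    # Longest tokens first so PERSON_1 cannot clobber the prefix of PERSON_12.
    for token in sorted(reverse, key=len, reverse=True):
        text = text.replace(token, reverse[token])
    return text


note = ("John Smith contacted Dr. Patel on March 3rd about his mother Jane's "
        "medication exception approved by Dr. Chen.")
spans = [span_of(note, "John Smith", "PERSON"), span_of(note, "Patel", "PERSON"),
         span_of(note, "March 3rd", "DATE"), span_of(note, "Jane", "PERSON"),
         span_of(note, "Chen", "PERSON")]

safe, reverse = pseudonymize(note, spans)
print(redact(note, spans))
print(safe)
```

The redacted version collapses four people into one marker. The pseudonymized version still tells the model that PERSON_1 contacted one clinician and a different clinician approved the exception. The naive string-replace `restore` is fine for a demo. A real system would also have to handle a model that drops, merges or invents tokens.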

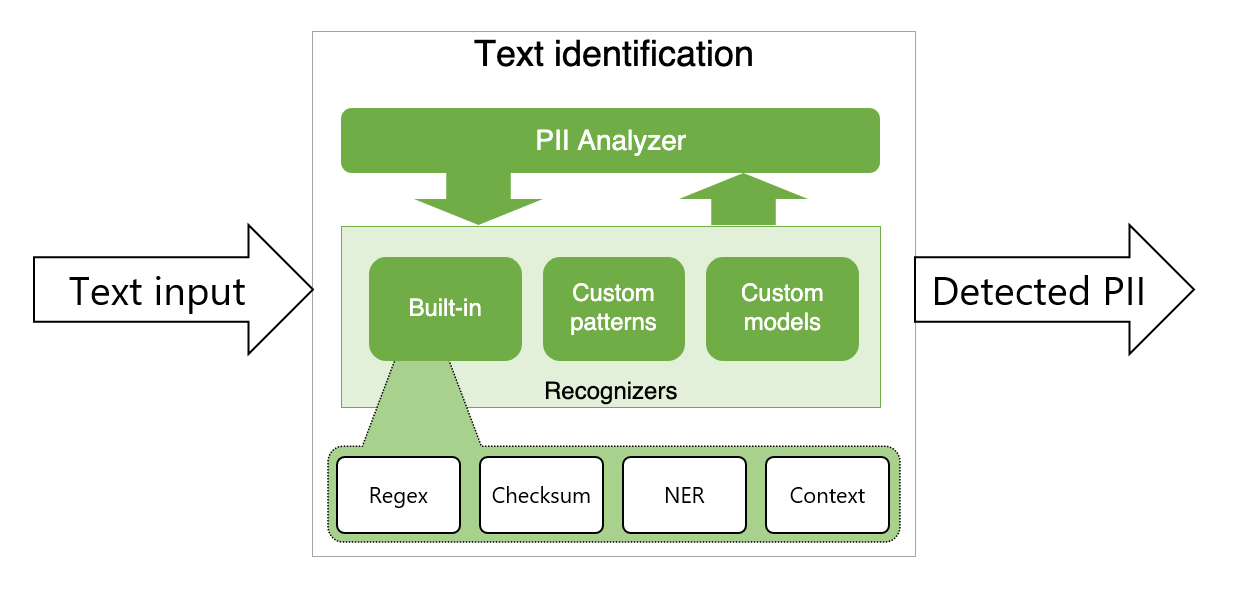

Microsoft's Presidio (an open-source PII detection framework) handles detection. LiteLLM (30k+ GitHub stars) already documents PII masking via Presidio integration. The "proxy + redaction" pattern is spreading fast.

But here's the gap: most implementations stop at removal. They find "John Smith" and delete it. Every time. They don't keep "John Smith" mapped to the same PERSON_17 across a 47-message email thread. They don't preserve relationships like "manager approved employee's request." They don't enforce tenant-wide vs. session-only scope policies. They don't produce evidence packs showing HIPAA Safe Harbor compliance.
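Consistent mapping and scope policies could look something like this. It is a sketch under stated assumptions. `PseudonymVault`, `Scope` and the policy dictionary are hypothetical names, not an existing library's API. The normalization rule (case-folding plus whitespace collapsing) is also a simplifying assumption.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Scope(Enum):
    TENANT = "tenant"    # one token per value across every session in the tenant
    SESSION = "session"  # tokens are minted fresh for each conversation


@dataclass
class PseudonymVault:
    # Hypothetical policy: which scope governs each entity type.
    policy: dict[str, Scope]
    default_scope: Scope = Scope.SESSION
    _tokens: dict[tuple, str] = field(default_factory=dict)
    _counters: dict[tuple, int] = field(default_factory=dict)

    def token_for(self, tenant: str, session: str, entity_type: str, value: str) -> str:
        scope = self.policy.get(entity_type, self.default_scope)
        # Tuples keep tenant-level and session-level keys from ever colliding.
        scope_id = ("tenant", tenant) if scope is Scope.TENANT else ("session", tenant, session)
        # Normalize case and whitespace so "John Smith" and "john  smith" match.
        normalized = " ".join(value.split()).casefold()
        key = (scope_id, entity_type, normalized)
        if key not in self._tokens:
            counter_key = (scope_id, entity_type)
            self._counters[counter_key] = self._counters.get(counter_key, 0) + 1
            self._tokens[key] = f"{entity_type}_{self._counters[counter_key]}"
        return self._tokens[key]


vault = PseudonymVault(policy={"PERSON": Scope.TENANT, "PHONE_NUMBER": Scope.SESSION})
a = vault.token_for("acme", "thread-1", "PERSON", "John Smith")        # "PERSON_1"
b = vault.token_for("acme", "thread-2", "PERSON", "john  smith")       # "PERSON_1" again
c = vault.token_for("acme", "thread-1", "PHONE_NUMBER", "555-0100")    # "PHONE_NUMBER_1"
d = vault.token_for("acme", "thread-2", "PHONE_NUMBER", "555-0199")    # "PHONE_NUMBER_1"
e = vault.token_for("acme", "thread-2", "PHONE_NUMBER", "555-0100")    # "PHONE_NUMBER_2"
```

The scope choice is a linkability trade-off. Tenant scope lets the same person be followed across a long thread or many threads. Anyone holding the prompts can also correlate them, though. Session scope breaks that link (the same phone number gets unrelated tokens in `thread-1` and `thread-2`) at the cost of continuity. The tokens are counters rather than hashes of the name, so a token carries no information derived from the person it stands for.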

That's the opportunity. Not better detection—detection is commoditizing. The opportunity is Auth0 for safe context under HIPAA/GDPR-grade governance.

Why Now

LLM adoption is outpacing governance maturity by 18 months

Companies rushed to deploy ChatGPT wrappers. Compliance teams are now catching up. HIPAA de-identification guidance has existed for decades, but LLM workflows (chat, agents, RAG, summarization) turned "minimum necessary" from a policy memo into a day-to-day engineering constraint.

HHS provides two HIPAA de-identification methods. Safe Harbor means removing 18 specific identifier types. Expert Determination means a qualified expert applies statistical or scientific methods and determines that the re-identification risk is "very small." Both were designed for static datasets, not for streaming conversational AI, where the model is only as useful as the context that survives into its window.
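Two of the Safe Harbor rules show the tension with conversational context. Dates directly related to an individual keep only the year. Ages over 89 collapse into a single "90 or older" category. The sketch below is an illustration, not a de-identification tool. It covers two of the 18 categories, it ignores birth years that would reveal an age over 89, and the dates are invented example values.

```python
from datetime import date


def safe_harbor_date(d: date) -> str:
    # Safe Harbor keeps only the year of dates directly related to an
    # individual (admission, discharge, date of death, ...).
    return str(d.year)


def safe_harbor_age(age: int) -> str:
    # Ages over 89 are aggregated into a single "90 or older" category.
    # Not handled here: birth years that would reveal such an age.
    return "90 or older" if age > 89 else str(age)


admitted = date(2024, 3, 3)
discharged = date(2024, 3, 17)
print(safe_harbor_date(admitted), safe_harbor_date(discharged))
print(safe_harbor_age(92), safe_harbor_age(67))
```

Both dates become "2024". A model reading the result can no longer tell which event came first or how long the stay lasted. That is acceptable for a static research extract. It hurts a summarization prompt that needs the timeline.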

The "LLM proxy + PII masking" pattern is already emerging

LiteLLM documents PII/PHI masking via Presidio. Amazon Bedrock Guardrails support PII detection and masking. Lasso Security and Pillar Security offer PII masking with selective blocking. Private AI provides enterprise DLP for LLM prompts.
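The core of the "proxy + masking" pattern is a round trip: mask on the way out, call the model, restore on the way back. Below is a minimal sketch of that loop. `proxy_call` and `fake_model` are hypothetical names, and this is not LiteLLM's or Bedrock's API. The regex detects only email addresses and stands in for a real detector. The model is a stub so the example runs offline.

```python
from __future__ import annotations

import re
from typing import Callable

# Deliberately simple email pattern; a real deployment would use a detector.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def proxy_call(prompt: str, model: Callable[[str], str]) -> str:
    forward: dict[str, str] = {}

    def mask(match: re.Match) -> str:
        # Same address -> same token within this request.
        value = match.group(0)
        if value not in forward:
            forward[value] = f"EMAIL_{len(forward) + 1}"
        return forward[value]

    masked = EMAIL.sub(mask, prompt)
    reply = model(masked)  # the upstream model only ever sees tokens
    # Restore longest tokens first so EMAIL_1 cannot clobber part of EMAIL_12.
    for value, token in sorted(forward.items(), key=lambda kv: len(kv[1]), reverse=True):
        reply = reply.replace(token, value)
    return reply


seen: list[str] = []


def fake_model(masked_prompt: str) -> str:
    # Stand-in for a real LLM call; records exactly what would leave the building.
    seen.append(masked_prompt)
    return "Draft a reply to EMAIL_1 and cc EMAIL_2."


answer = proxy_call(
    "Summarize the thread between jane.doe@example.com and ops@example.com.", fake_model
)
```

Here the mapping lives only for one request. Keeping it consistent across a thread is the job of a longer-lived, policy-scoped store.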

AI platform teams already understand they need something between users and models. The market is warmed up. Early adopters are duct-taping solutions. You're not inventing demand—you're shipping the sharper, LLM-native version of patterns customers already half-built.

The gap: governance, not detection

Open-source tools handle detection reasonably well. Presidio offers 20+ entity recognizers (names, SSNs, phone numbers, email addresses, medical license numbers). Azure AI Language, Amazon Comprehend, and others provide similar capabilities.
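Structured identifiers are the easy part of detection. The toy recognizer below returns results with the same field names as Presidio's `RecognizerResult` (`entity_type`, `start`, `end`, `score`), but it is a stand-in and not Presidio's code. The confidence scores are made-up values. Names and locations need a statistical NER model rather than a regex, which is where libraries like Presidio (spaCy-backed by default) earn their keep.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    # Field names mirror Presidio's RecognizerResult; this class is a stand-in.
    entity_type: str
    start: int
    end: int
    score: float


# Illustrative patterns and confidence scores (made-up values, not Presidio's).
PATTERNS: dict[str, tuple[re.Pattern, float]] = {
    "EMAIL_ADDRESS": (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), 0.9),
    # A bare SSN-shaped number is weak evidence without surrounding context.
    "US_SSN": (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), 0.5),
}


def analyze(text: str) -> list[Result]:
    # Run every pattern over the text and return hits in reading order.
    results = [
        Result(entity, m.start(), m.end(), score)
        for entity, (pattern, score) in PATTERNS.items()
        for m in pattern.finditer(text)
    ]
    return sorted(results, key=lambda r: r.start)


sample = "SSN 123-45-6789, email a.b@example.org"
found = analyze(sample)
```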

What's missing is a governance control plane for LLM traffic: reversible pseudonymization that preserves role and relationship structure across conversations.
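A control plane needs to log what it did, not just do it. One such piece is the per-request evidence record that current masking tools don't produce. The sketch below assumes a hypothetical record schema (`request_id`, `policy_version`, counts per entity type, tokens issued), not a HIPAA-mandated format. The key design choice is that raw values are never written to the log. The evidence trail proves what kind of data was transformed without becoming a second copy of the PHI.

```python
from __future__ import annotations

import json
from collections import Counter
from datetime import datetime, timezone


def audit_record(request_id: str, policy_version: str, issued: dict[str, str]) -> str:
    # `issued` maps token -> entity type for one request, e.g. {"PERSON_1": "PERSON"}.
    # Original values are deliberately absent from the record.
    counts = Counter(issued.values())
    record = {
        "request_id": request_id,
        "policy_version": policy_version,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "entities_pseudonymized": dict(sorted(counts.items())),
        "tokens": sorted(issued),
    }
    return json.dumps(record, sort_keys=True)


line = audit_record(
    "req-1", "hipaa-policy-v3",
    {"PERSON_1": "PERSON", "PERSON_2": "PERSON", "DATE_1": "DATE"},
)
print(line)
```

Records like this, aggregated over time and tied to a versioned policy, are the raw material for a compliance evidence pack.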

What enterprises actually pay for:
